My original plan for Friday was to walk you through an introduction to Natural Language Processing using the Stanford NLP program and the coreNLP R package. By the way, for those of you who tried it and are having problems with initCoreNLP(), the comments below are specifically for you. (Here’s the link for the script.)

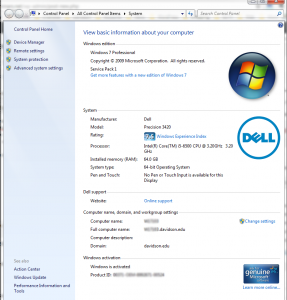

I ran the Chapter 9 script successfully on my desktop, but that machine has 64 GB of RAM (it is not a standard College computer; it was upgraded for media production work), and even then the script only ran when I set initCoreNLP(mem = "32g"). I assume that most of you don’t have 64 GB of RAM, and when I tried running it with 8 GB, the script ran into problems. You can work around this by inputting the text directly (in the script, cut and paste the text into the sIn variable), but the annotation step was still slow.

So over the weekend, I compared two tutorials (one here, by Philip Murphy, and the other here, by Kailash Awati) that use a different R package, tm. It looked less resource-intensive, meaning that most laptops could handle the processing. As I was testing it, I realized that I could do the data clean-up by hand, and while the process was clumsy, I ended up with better data (interactions between characters by scene) than I could get by using NLP.
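If you want to speed up part of that by-hand clean-up, the first step is grouping the transcript into scenes and recording who speaks in each one. Below is a minimal sketch in Python (not the R tm workflow from the tutorials), assuming a hypothetical transcript layout in which scene headings begin with "INT." or "EXT." and each line of dialogue begins with an upper-case speaker name followed by a colon. The function name speakers_by_scene and the sample text are made up for illustration; a real transcript may be formatted differently, so treat the regular expressions as placeholders you would adjust after looking at the actual file.

```python
import re

# Hypothetical transcript layout (an assumption, not the real Gossip Girl format):
# scene headings start with "INT." or "EXT.", and dialogue lines start with
# an upper-case speaker name followed by a colon, e.g. "BLAIR: ...".
SCENE_RE = re.compile(r"^(INT|EXT)\.")
SPEAKER_RE = re.compile(r"^([A-Z][A-Z .'-]*):")


def speakers_by_scene(lines):
    """Group transcript lines into scenes; return each scene's speakers in order of first line."""
    scenes = []
    current = None
    for raw in lines:
        line = raw.strip()
        if SCENE_RE.match(line):
            current = []  # a scene heading starts a new group
            scenes.append(current)
            continue
        match = SPEAKER_RE.match(line)
        if match:
            if current is None:  # dialogue that appears before the first heading
                current = []
                scenes.append(current)
            name = match.group(1).strip().title()
            if name not in current:  # record each speaker once per scene
                current.append(name)
    return scenes


# Made-up sample text, not taken from the Pilot transcript.
sample = """INT. TRAIN STATION - DAY
SERENA: ...
EXT. HOTEL - NIGHT
BLAIR: ...
NATE: ...
BLAIR: ...
"""
print(speakers_by_scene(sample.splitlines()))
# [['Serena'], ['Blair', 'Nate']]
```

Whatever the parser produces still needs checking by hand: characters who appear in a scene without speaking, or names written inconsistently, will not be caught by a pattern like this.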

So here’s your challenge for today. In your groups, look at the script at the following site: the Gossip Girl transcript for the Pilot. How would you begin to construct a social network from character interactions? Many people are working in this area (you can search for RoleNet or Character-Net), but for the purposes of this class (and for the experience of actually working with data), some hands-on data production may be useful.
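One possible starting point, once you have a list of characters for each scene, is to treat two characters as connected whenever they appear in the same scene and to weight each connection by the number of scenes they share. The Python sketch below assumes exactly that rule (scene co-occurrence as a stand-in for interaction), which is only one of several reasonable choices. The function names interaction_edges and weighted_degree are hypothetical, and the scenes list is toy data for illustration, not counts from the actual Pilot.

```python
from collections import Counter
from itertools import combinations


def interaction_edges(scenes):
    """Count, for each unordered pair of characters, how many scenes they share."""
    edges = Counter()
    for cast in scenes:
        # set() drops duplicates and sorted() gives each pair a stable order,
        # so every pair is counted at most once per scene
        for a, b in combinations(sorted(set(cast)), 2):
            edges[(a, b)] += 1
    return edges


def weighted_degree(edges):
    """Sum the edge weights touching each character (a simple centrality measure)."""
    degree = Counter()
    for (a, b), weight in edges.items():
        degree[a] += weight
        degree[b] += weight
    return degree


# Toy scenes for illustration only, not data from the Pilot transcript.
scenes = [
    ["Serena", "Blair"],
    ["Blair", "Nate", "Serena"],
    ["Dan", "Jenny"],
    ["Serena", "Dan"],
]

edges = interaction_edges(scenes)
for (a, b), weight in sorted(edges.items()):
    print(f"{a} -- {b}: {weight}")
print(weighted_degree(edges).most_common(1))  # [('Serena', 4)]
```

The resulting edge list (pair plus weight) is the kind of table most network tools can import. A stricter definition, such as counting only characters who speak directly to each other, would give a sparser network but requires reading the dialogue more closely.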